The Problem We All Know Too Well

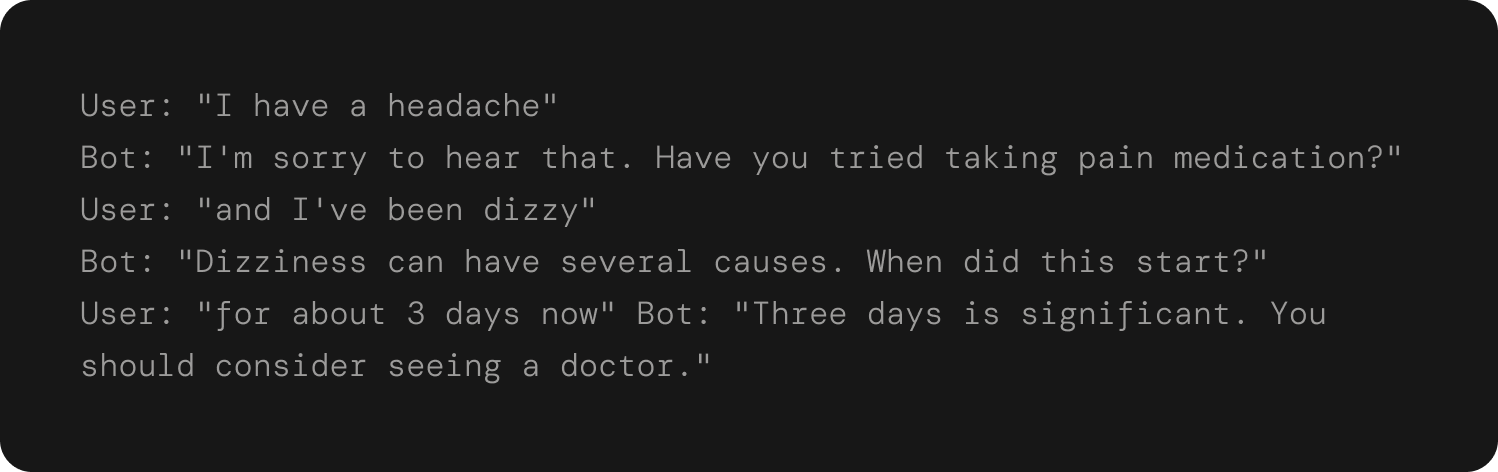

Anyone who's used a chatbot has experienced this awkward dance: you start typing a message, the bot immediately responds to your incomplete thought, then you send another message, and the bot responds again. Before you know it, you're having three different conversations at once while the bot frantically tries to keep up with your stream of consciousness.

It's like talking to someone who interrupts you mid-sentence every time you pause to breathe. Real conversations don't work this way. When you're explaining something complex to a friend, they wait for you to finish your complete thought before responding. They might nod along or say "mm-hmm," but they don't jump in with advice after every sentence fragment.

Here's what this looks like in practice with traditional chatbots:

Meanwhile, any human would have just waited for the complete picture before responding. The bot's eager helpfulness actually creates confusion and frustration.

Our Solution: Teaching Agents to Listen First

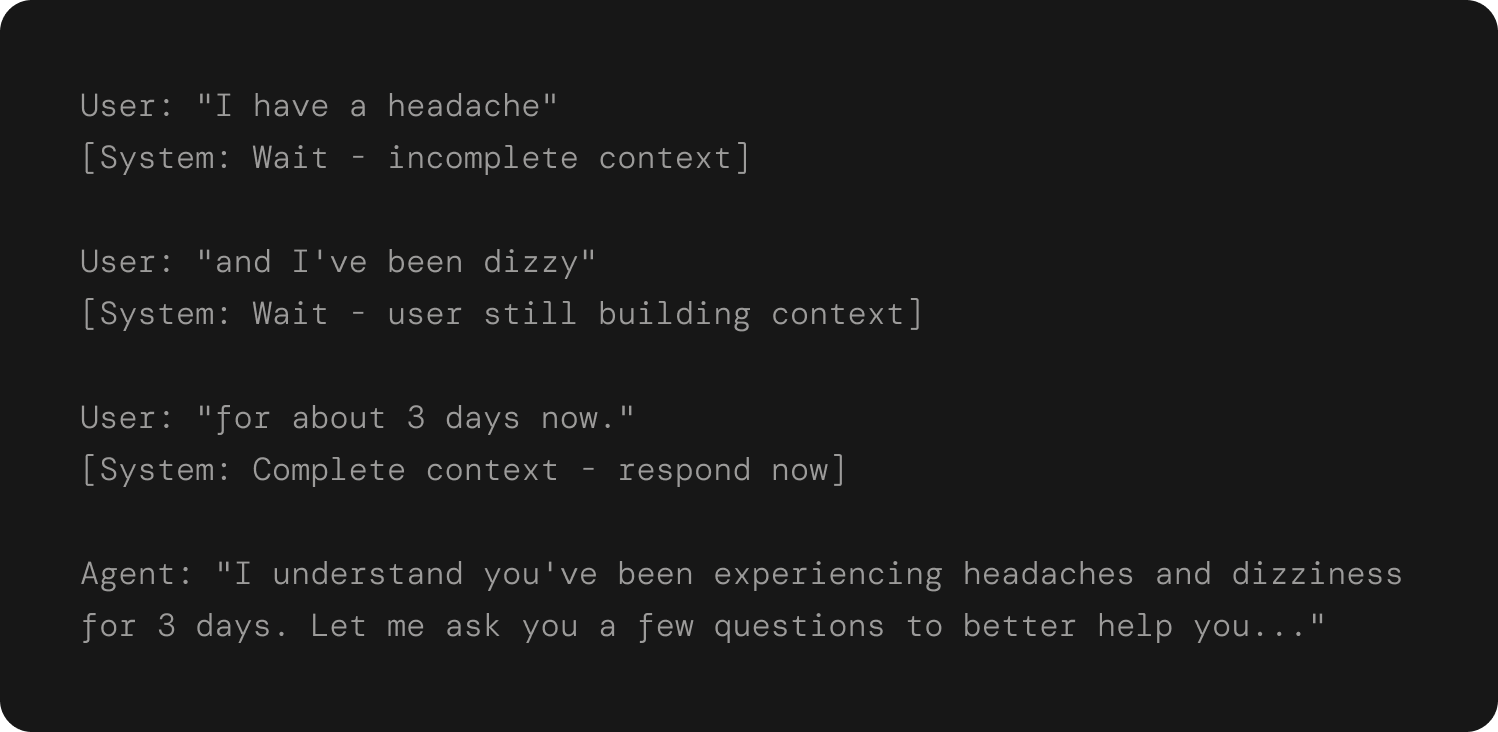

We follow an approach we're calling "Agent-in-the-Loop": essentially a smart pause button for conversational AI. The core insight is simple: instead of responding to every message immediately, we add a layer that decides when the agent should actually speak up.

Think of it like having a really good listener who knows when you're still building up to your point versus when you've finished and are waiting for a response. The system watches for natural conversation cues that humans instinctively understand but that most bots completely ignore.

Some signals are obvious: a message ending in a question mark usually means the user wants an immediate response. Others are more subtle, like noticing when someone sends several messages in quick succession or uses continuation words like "and" or "also" at the end of a message.

The Magic of Acknowledgment

One breakthrough came when we let our agents acknowledge that they're thinking, just like humans do. Instead of either responding instantly or staying silent, the agent can say things like "Got it, let me check my system for a second" or "I'm processing everything you've told me."

This small change transformed how users perceived the interaction. Instead of wondering if the agent was broken during those brief pauses, users felt heard and understood. They knew the agent was actively working on their problem rather than just being slow or unresponsive. The same works for read signals and typing indicators! All of these are tools to make the conversation more human, closer to our mental models of how conversations happen.

The acknowledgments also buy us time to collect more context. When someone starts with "I need help with something complicated," the agent can respond with "Of course! Take your time explaining what you need" rather than immediately asking for clarification. This creates space for users to provide complete information upfront.
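To make the "acknowledge, then keep listening" idea concrete, here is a minimal Python sketch. It assumes messages arrive on an `asyncio.Queue` and that a quiet period with no new messages marks the end of a turn. The names `collect_turn`, `send`, and `QUIET_PERIOD_SECONDS`, the sample user messages, and the timing values are all made up for illustration.

```python
import asyncio

QUIET_PERIOD_SECONDS = 0.1  # assumed value, kept short so the demo runs quickly


async def collect_turn(inbox: asyncio.Queue, send) -> list[str]:
    """Acknowledge the first message, then gather follow-ups until the user goes quiet."""
    messages = [await inbox.get()]
    # Acknowledge right away so the user knows they were heard.
    await send("Of course! Take your time explaining what you need.")
    while True:
        try:
            follow_up = await asyncio.wait_for(
                inbox.get(), timeout=QUIET_PERIOD_SECONDS
            )
        except asyncio.TimeoutError:
            return messages  # quiet for long enough: treat the turn as complete
        messages.append(follow_up)


async def demo() -> tuple[list[str], list[str]]:
    inbox: asyncio.Queue = asyncio.Queue()
    sent: list[str] = []

    async def send(text: str) -> None:
        sent.append(text)  # stand-in for pushing a message to the chat UI

    async def user() -> None:
        # Hypothetical user who splits one thought across three sends.
        for text in [
            "I need help with something complicated",
            "my invoice shows two charges",
            "and one of them is for a plan I cancelled",
        ]:
            await inbox.put(text)
            await asyncio.sleep(0.02)

    typing = asyncio.create_task(user())
    messages = await collect_turn(inbox, send)
    await typing
    return messages, sent
```

Running `asyncio.run(demo())` returns all three user messages as one turn plus the single acknowledgment. A real system would likely pick the quiet period per channel, since typing and voice have very different pause lengths.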

Smart Interruption: The Game Changer

The most impactful feature we are building is the ability to interrupt and restart response generation when users provide new information. Traditional chatbots get locked into their first interpretation of a request and can't adapt when users clarify or correct themselves.

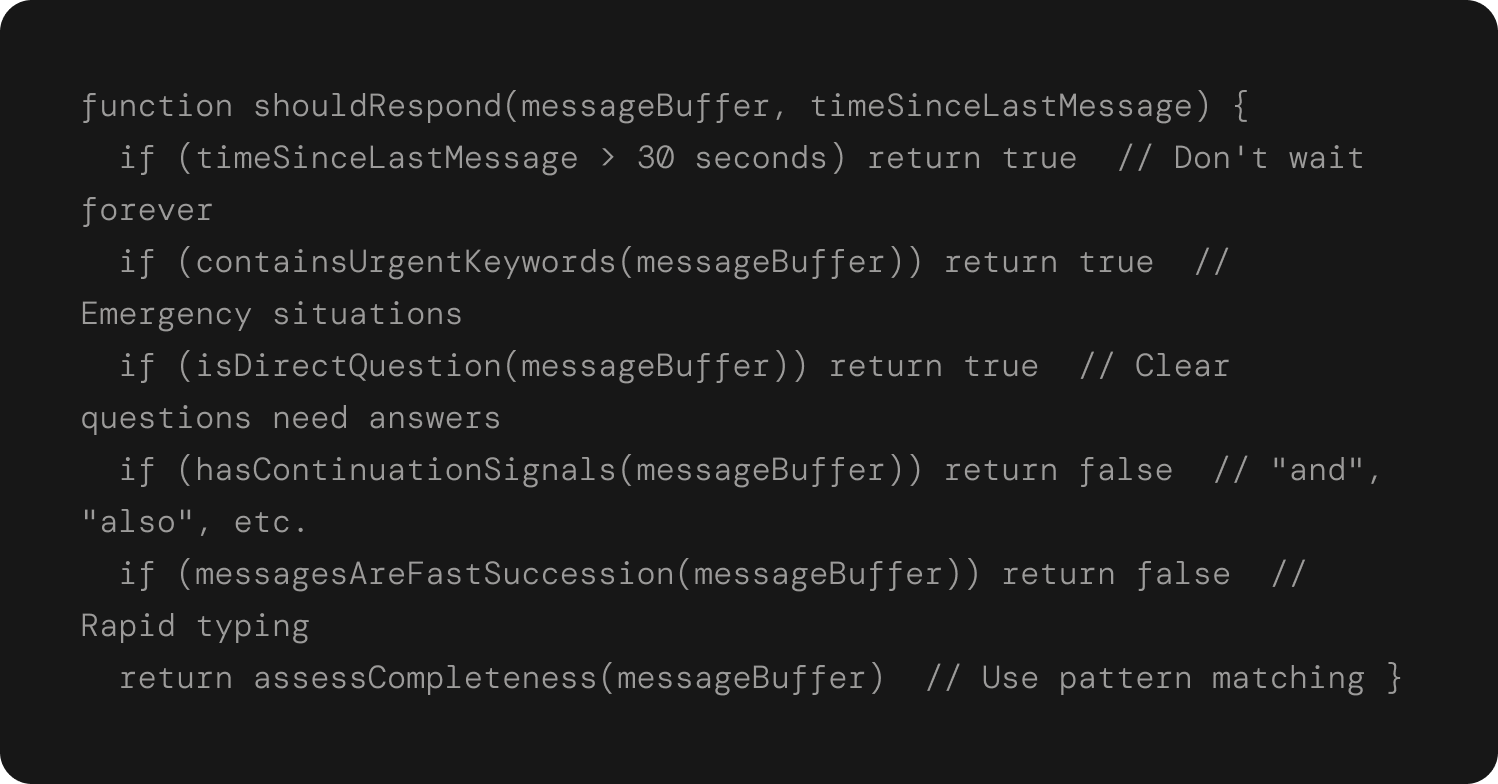

The technical implementation involves monitoring for new messages while generating responses:

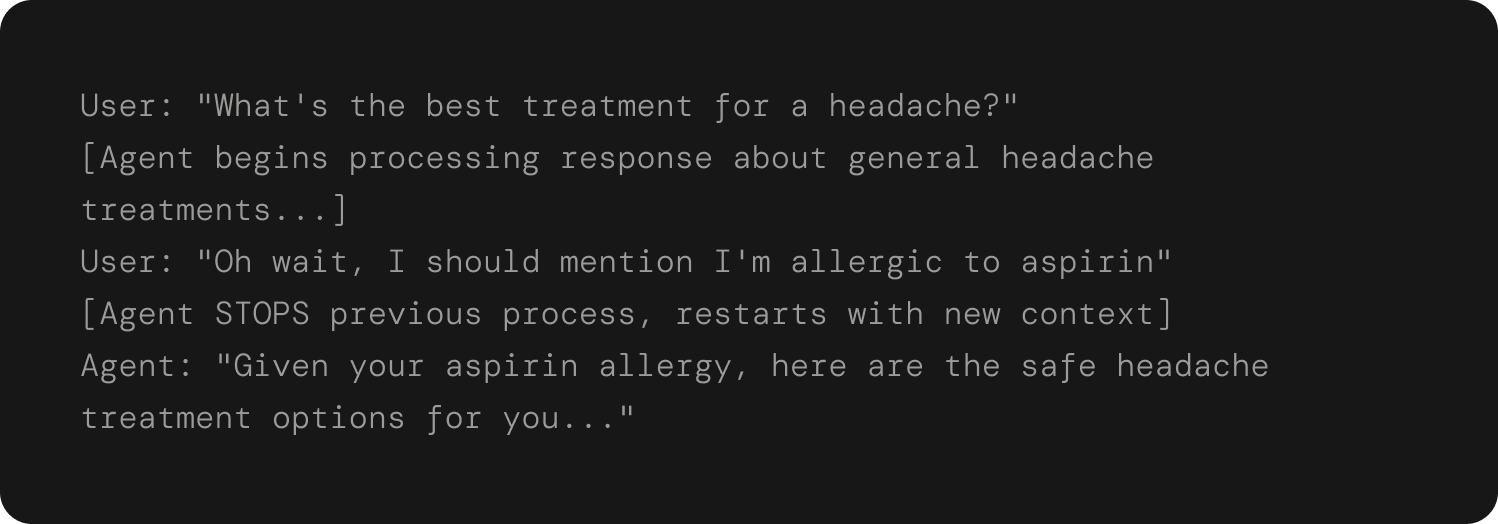
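As a rough illustration of that monitoring loop, the sketch below races response generation against the arrival of the next message using Python's `asyncio`. It is one way this could be done, not a description of our actual code: `generate_reply` is a hypothetical stand-in for a slow model call, and the queue-based inbox and the timings are assumptions.

```python
import asyncio


async def generate_reply(messages: list[str]) -> str:
    """Hypothetical stand-in for a slow model call."""
    await asyncio.sleep(0.2)  # simulate generation latency
    return "Reply considering: " + " | ".join(messages)


async def respond_with_interruption(inbox: asyncio.Queue) -> str:
    """Generate a reply, restarting whenever a new message arrives mid-generation."""
    messages = [await inbox.get()]
    while True:
        generation = asyncio.create_task(generate_reply(messages))
        next_message = asyncio.create_task(inbox.get())
        done, _ = await asyncio.wait(
            {generation, next_message}, return_when=asyncio.FIRST_COMPLETED
        )
        if next_message in done:
            # New information arrived: drop the stale draft and start over.
            generation.cancel()
            await asyncio.gather(generation, return_exceptions=True)
            messages.append(next_message.result())
            continue
        # Generation finished first: stop listening and return the reply.
        next_message.cancel()
        await asyncio.gather(next_message, return_exceptions=True)
        return generation.result()


async def demo() -> str:
    inbox: asyncio.Queue = asyncio.Queue()
    await inbox.put("What's the best treatment for a headache?")

    async def late_correction() -> None:
        await asyncio.sleep(0.05)  # arrives while the first draft is generating
        await inbox.put("Oh wait, I should mention I'm allergic to aspirin.")

    correction = asyncio.create_task(late_correction())
    reply = await respond_with_interruption(inbox)
    await correction
    return reply
```

Calling `asyncio.run(demo())` yields a single reply built from both messages, because the first draft is cancelled before it is ever sent. With a streaming model, the same pattern would also need to retract or mark any partial text already shown to the user.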

Here's a real example from our healthcare agent: A user asked "What's the best treatment for a headache?" and while the agent was generating general headache advice, the user added "Oh wait, I should mention I'm allergic to aspirin." Our system immediately stopped generating the original response and started over with the allergy information included.

Without this feature, the agent would have finished and sent its original answer, written without knowing about the allergy, then had to awkwardly backtrack once the user's correction came in.

Lessons from Implementation

Building this taught us that conversation flow is more about psychology than technology. The technical implementation is relatively straightforward - pattern matching on message content and timing, plus some basic state management. The hard part was understanding human conversation patterns well enough to recognize when someone is still building their thought versus when they're waiting for a response.

We discovered several key patterns that signal when users are still formulating their thoughts:

"Wait, There's More" signals:

- Messages ending with "and", "also", "but"

- Rapid-fire messages (less than 2 seconds apart)

- Incomplete sentences or sentence fragments

- Words like "wait", "actually", "oh also"

"Respond Now" signals:

- Direct questions ending with "?"

- Commands like "Help me with..."

- Urgent keywords ("emergency", "urgent", "asap")

- Longer pauses (more than 10 seconds)
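The two signal lists above can be sketched as a small rule-based classifier. This is a simplified illustration in Python, not a complete rule set: the `Message` type, the `decide` function, and the choice to let "respond now" signals override "wait" signals are all assumptions, and sentence-fragment and command detection are left out because they need more than keyword matching.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Word lists and thresholds copied from the signals listed above.
CONTINUATION_ENDINGS = ("and", "also", "but")
CORRECTION_MARKERS = ("wait", "actually", "oh also")
URGENT_KEYWORDS = ("emergency", "urgent", "asap")
RAPID_FIRE_SECONDS = 2.0
LONG_PAUSE_SECONDS = 10.0


@dataclass
class Message:
    text: str
    seconds_since_previous: Optional[float] = None  # None for the first message


def decide(message: Message, seconds_since_message: float = 0.0) -> str:
    """Return "respond" or "wait" for the latest message in a conversation."""
    text = message.text.strip().lower()
    words = re.findall(r"[a-z']+", text)

    # "Respond now" signals are checked first (an assumed priority order).
    if any(word in URGENT_KEYWORDS for word in words):
        return "respond"
    if seconds_since_message > LONG_PAUSE_SECONDS:
        return "respond"
    if text.endswith("?"):
        return "respond"

    # "Wait, there's more" signals.
    if words and words[-1] in CONTINUATION_ENDINGS:
        return "wait"
    if any(re.search(rf"\b{re.escape(m)}\b", text) for m in CORRECTION_MARKERS):
        return "wait"
    gap = message.seconds_since_previous
    if gap is not None and gap < RAPID_FIRE_SECONDS:
        return "wait"

    # No signal either way: default to responding.
    return "respond"
```

For example, `decide(Message("My order is late and"))` returns `"wait"`, while `decide(Message("Where is my order?"))` returns `"respond"`. Hard-coded rules like these are easy to reason about, but the thresholds would need tuning per user and per channel.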

We learned that users have very different communication styles. Some people send everything in one long message, others break every sentence into separate sends. Some use voice-to-text which creates unusual punctuation patterns. The system needed to be flexible enough to handle all these variations while still providing a natural experience.

Voice interfaces amplified every aspect of this challenge. When users are speaking rather than typing, background noise and natural speech patterns create even more ambiguity about when thoughts are complete. The interruption capability becomes essential because people naturally pause mid-sentence while speaking, and responding to every pause would make conversation impossible.

The Broader Picture

This approach reflects a fundamental shift in how our team thinks about conversational AI. Instead of trying to respond as quickly as possible, we're optimizing for responding at the right time with the right context. It's the difference between being efficient and being effective.

The results speak for themselves: conversation abandonment rates dropped significantly, user satisfaction improved, and support resolution became more effective because agents got better information upfront. More importantly, the interactions started feeling natural in a way that surprised even our own team.

Looking ahead, we think this kind of contextual awareness will become table stakes for any serious conversational AI application. The technology exists today - it's mostly about applying human conversation intuition to agent behavior and being willing to sacrifice response speed for response quality.

The future of conversational AI isn't about responding faster. It's about responding smarter, at the right moment, with the right context. Sometimes the smartest thing to do is wait for the complete picture before trying to help.