Latency is one of those invisible forces that shapes user experience more than most people realize. In everyday life, we rarely think about it. We tap a button, type a message, or speak into a phone, and expect something to happen immediately. But the gap between action and response, known as latency, determines whether an interaction feels natural or frustrating. In healthcare, where patients may already be stressed, that perception becomes critical.

What latency means

By definition, latency is the time delay between a user’s action and the system’s response. In a chat system, it is the time between pressing “send” and seeing a reply appear. In a voice interaction, it is the silence between finishing a sentence and hearing the system’s answer. Humans are surprisingly sensitive to these delays: anything under 100 milliseconds feels immediate. Up to about 250 ms still feels snappy, while delays of half a second or more shift the experience into “I’m waiting.” By the one-second mark, most people consciously notice lag, and beyond that trust begins to erode.

When measuring latency, it’s tempting to focus on a single component such as model inference time, but users don’t care about isolated metrics. They only perceive end-to-end latency: from the moment their message leaves their device, through the channel provider, into your backend, across to the model or tools, through safety guardrails, and back again to the device. For engineers, this means measuring not just averages but distributions. A system that usually responds in 600 ms but occasionally takes four seconds will still feel unreliable. Percentiles such as p90 or p95 tell the real story of user experience: p95 is the latency that 95% of requests stay at or under, so it describes what the slowest 5% of interactions feel like (and p90 does the same for the slowest 10%).
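As a minimal sketch of why averages mislead, the snippet below computes the mean and nearest-rank percentiles over a made-up set of end-to-end latencies. The sample values and the `percentile` helper are illustrative assumptions, not measurements from a real system.

```python
import math

def percentile(samples_ms, p):
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    if not samples_ms:
        raise ValueError("need at least one sample")
    ordered = sorted(samples_ms)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]

# Hypothetical end-to-end latencies in ms: mostly 600 ms, with two slow outliers.
samples = [600] * 18 + [4000, 4200]

mean_ms = sum(samples) / len(samples)   # 950.0, a number no user actually experienced
p50 = percentile(samples, 50)           # 600
p90 = percentile(samples, 90)           # 600, still hides the outliers
p95 = percentile(samples, 95)           # 4000, exposes the slow tail
```

With only 20 samples the tail is coarse; in practice percentiles are computed over much larger windows, usually by the metrics system rather than by hand.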

It is also helpful to distinguish between local latency and E2E latency. Local latency measures a single component in isolation, for example, the raw inference time of an LLM. E2E latency is the whole chain the user experiences. Optimizing the first without considering the second often leads to misleading conclusions.
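One way to keep both views side by side is to time each stage and the whole request in the same trace. The sketch below is an assumption about how that might look: `LatencyTrace` and the stage names are hypothetical, and it only captures server-side time, so the network leg to the user's device would have to be measured separately.

```python
import time
from contextlib import contextmanager

class LatencyTrace:
    """Records per-stage (local) timings alongside the total elapsed time."""

    def __init__(self):
        self.stages = {}                      # stage name -> duration in ms
        self._start = time.perf_counter()

    @contextmanager
    def stage(self, name):
        t0 = time.perf_counter()
        try:
            yield
        finally:
            self.stages[name] = (time.perf_counter() - t0) * 1000

    def total_ms(self):
        """Time since the trace was created, including gaps between stages."""
        return (time.perf_counter() - self._start) * 1000

if __name__ == "__main__":
    trace = LatencyTrace()
    with trace.stage("retrieval"):
        time.sleep(0.01)                      # stand-in for a knowledge-base lookup
    with trace.stage("llm_inference"):
        time.sleep(0.02)                      # stand-in for the model call
    print(trace.stages, round(trace.total_ms(), 1))
```

Comparing `total_ms()` with the sum of the stages shows how much time is spent in places no single component's metric accounts for.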

Why latency is so difficult

One reason latency is a persistent challenge is that no single party controls the full pipeline. A digital healthcare assistant, for example, depends on WhatsApp APIs and/or telephony networks, on hospital scheduling systems, on insurance databases, and on your own microservices, not to mention the LLM provider and guardrails. Each of these adds its own overhead, and the slowest link sets the pace.

The complexity is even greater because modern bots rarely just “generate text.” They may call a scheduling API to check availability, query an EMR for lab results, verify insurance coverage, or run through compliance guardrails. Every step adds delay. Patients, however, don’t make allowances for that complexity. They compare your system against the speed of a human receptionist answering the phone. That comparison creates direct SLA pressure: if the bot feels slower than a human, it will be seen as inferior even if it scales to thousands of concurrent conversations. Remember: the patient on the other end does not care that you are talking to thousands of other patients.

This concern is not unique to healthcare. Amazon found that every 100 ms of added page load time cost about 1% in revenue, and Google measured that 100 to 400 ms of added search latency reduced search volume by roughly 0.5%.

Voice versus text

The way latency is perceived differs dramatically between channels.

In voice, silence feels awkward almost immediately. Conversation has rhythm, and pauses longer than half a second already feel strained. That is why engineers working on voice systems often aim for sub-600 ms p95 for the first spoken word. The bottlenecks are not just the LLM but also endpointing (detecting when the user has finished speaking), automatic speech recognition, and text-to-speech synthesis. Each adds latency, and together they can quickly push you past the point where silence becomes uncomfortable.

Text is more forgiving. Users on WhatsApp or SMS can tolerate around a second without frustration. More importantly, text offers tools for perception management. A typing indicator that appears almost immediately reassures the user that the system is working. Streaming partial responses helps too. A reply that begins streaming at 200 ms and completes in two seconds feels faster than one that appears fully formed after a single second. In both channels, the absolute numbers matter, but the perception is just as important.

Part of the reason is expectation. In ordinary text conversations between people, replies routinely take seconds or even minutes, so users on WhatsApp or SMS arrive with far more patience than callers on a voice line.

How to improve latency

Improving latency requires attacking it from multiple angles. Each strategy chips away at the total, and together they make the difference between a sluggish and a seamless system.

Do work sooner

The easiest milliseconds to save are the ones you never spend. Systems can start working before the full request is understood.

- Speculative retrieval: As soon as a message comes in, kick off a search of the knowledge base, even before intent classification is finished. In the worst case, the retrieval is discarded. In the best case, the results are already waiting when the LLM asks for them.

- Pre-warmed sessions: Keep LLM contexts alive. A “cold” session may add hundreds of milliseconds of initialization overhead. Maintaining warm sessions trades off compute cost against latency.

- Async context fetch: While the system parses the message, it can already be fetching the patient profile from the database.

Example: if a patient writes, “I need to schedule a cardiology appointment,” the system can already begin retrieving available slots as soon as the keyword “cardiology” appears, rather than waiting for the LLM to fully parse the sentence.
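The cardiology example can be sketched with `asyncio`: a cheap keyword check starts the slot lookup immediately, and the result is either used or discarded once the intent is known. Everything here is a hypothetical stand-in (`classify_intent`, `fetch_slots`, the `SPECIALTIES` list, and the sleep durations), not a real scheduling or LLM API.

```python
import asyncio

SPECIALTIES = ("cardiology", "dermatology")   # hypothetical keyword list

async def classify_intent(message):
    # Stand-in for a slower intent classifier or LLM call.
    await asyncio.sleep(0.05)
    return "schedule_appointment" if "schedule" in message.lower() else "other"

async def fetch_slots(specialty):
    # Stand-in for a scheduling API call.
    await asyncio.sleep(0.05)
    return [f"{specialty}: Mon 09:00", f"{specialty}: Tue 14:30"]

async def handle(message):
    text = message.lower()
    specialty = next((s for s in SPECIALTIES if s in text), None)
    # Start the lookup speculatively, before the intent is known.
    speculative = asyncio.create_task(fetch_slots(specialty)) if specialty else None

    intent = await classify_intent(message)

    if speculative is None:
        return intent, []
    if intent != "schedule_appointment":
        speculative.cancel()                  # worst case: the work is discarded
        return intent, []
    return intent, await speculative          # best case: slots are already on the way

if __name__ == "__main__":
    print(asyncio.run(handle("I need to schedule a cardiology appointment")))
```

Because the lookup and the classification overlap, the handler waits roughly as long as the slower of the two rather than their sum. The cost is an occasional wasted API call when the guess is wrong.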

Do work closer

Distance matters. A request that travels from São Paulo to a US-East data center and back can burn 200 ms before any computation even starts.

- Deploy services in the same region as your users.

- Use connection pooling so you don’t pay TCP and TLS handshakes on every request.

- Terminate encryption at the edge where possible, handing traffic off to trusted internal networks.

These optimizations rarely grab attention, but they add up. Network round-trips are latency’s silent killer.
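A back-of-the-envelope calculation shows why connection reuse matters. A new HTTPS connection pays one round trip for the TCP handshake plus the TLS handshake (one round trip for a full TLS 1.3 handshake, two for TLS 1.2) before the request itself can be sent. The round-trip time used below is an assumed illustrative value, and the function ignores server processing time.

```python
def request_time_ms(rtt_ms, reuse_connection, tls_handshake_rtts=1):
    """Network time for one HTTPS request, ignoring server processing.

    New connection: 1 RTT (TCP) + tls_handshake_rtts (TLS) + 1 RTT (request/response).
    Reused connection: only the request/response round trip.
    """
    setup_rtts = 0 if reuse_connection else 1 + tls_handshake_rtts
    return (setup_rtts + 1) * rtt_ms

ASSUMED_RTT_MS = 150  # illustrative long-haul round trip, not a measured value

cold = request_time_ms(ASSUMED_RTT_MS, reuse_connection=False)   # 450
warm = request_time_ms(ASSUMED_RTT_MS, reuse_connection=True)    # 150
```

Under these assumptions a pooled connection cuts the network portion of a long-haul request to a third, and moving the service into the user's region shrinks the round trip itself.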

Parallelize tasks

Sequential pipelines are the enemy of speed. Instead of fetching the patient record, then querying the knowledge base, then constructing the LLM prompt, do these in parallel.

For example, when a patient asks, “What were my last lab results?” the system can:

- Begin constructing the LLM prompt.

- Hit the lab API.

- Run retrieval over prior notes.

By the time the LLM is ready to compose a response, the data is already on hand.

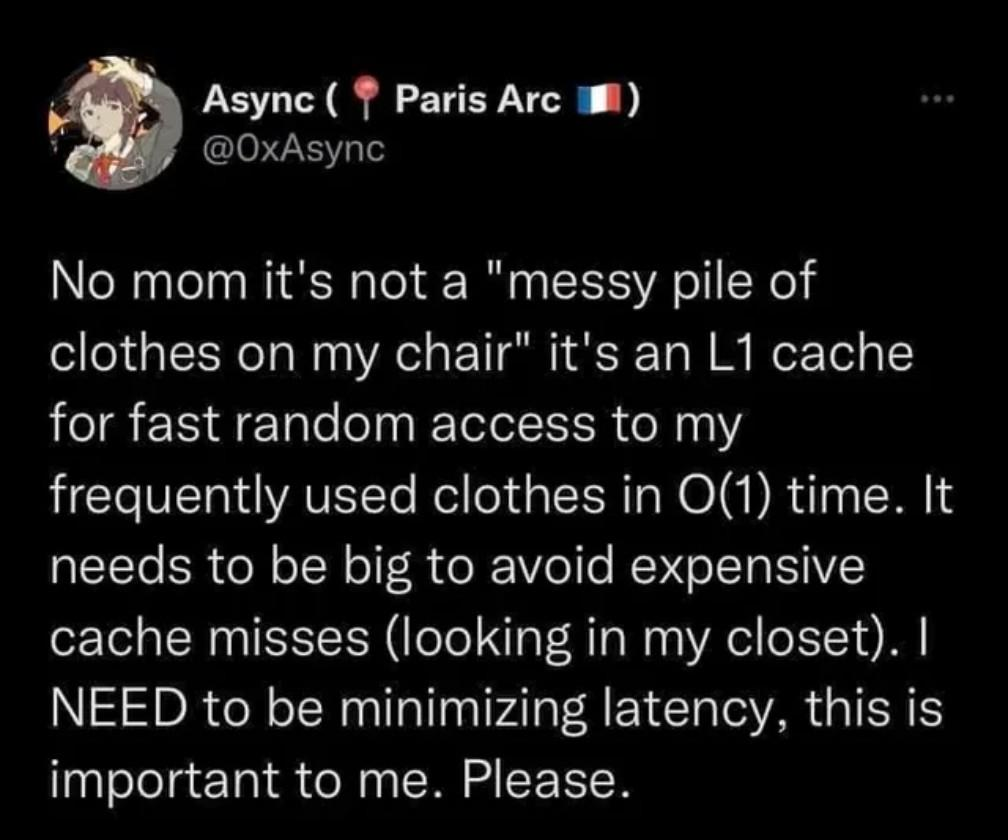
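The three parallel steps from the lab-results example can be sketched with `asyncio.gather`. The function names, the returned data, and the sleep durations are hypothetical placeholders for real prompt construction, a lab API, and a retrieval service.

```python
import asyncio

async def build_prompt(question):
    await asyncio.sleep(0.01)                 # stand-in for template and history assembly
    return f"Answer the patient's question: {question}"

async def fetch_lab_results(patient_id):
    await asyncio.sleep(0.05)                 # stand-in for a lab API call
    return {"patient_id": patient_id, "status": "results available"}

async def retrieve_notes(patient_id):
    await asyncio.sleep(0.03)                 # stand-in for retrieval over prior notes
    return [f"note for {patient_id}"]

async def gather_context(patient_id, question):
    # All three coroutines run concurrently; results come back in argument order.
    prompt, labs, notes = await asyncio.gather(
        build_prompt(question),
        fetch_lab_results(patient_id),
        retrieve_notes(patient_id),
    )
    return {"prompt": prompt, "labs": labs, "notes": notes}

if __name__ == "__main__":
    print(asyncio.run(gather_context("p-1", "What were my last lab results?")))
```

Run sequentially, these stubs would take about 90 ms; run together, the wait is bounded by the slowest one, about 50 ms.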

Cache aggressively

Many requests are predictable. Clinic hours, insurance directories, or standard policies don’t need to hit the LLM every time.

- Store deterministic tool outputs.

- Cache frequent intents, especially for high-traffic flows like appointment scheduling.

- Invalidate intelligently when the underlying data changes.

Think of caching not just as a performance optimization but as a correctness strategy: the cached answer to “What are your opening hours?” is likely more reliable than generating it fresh each time.
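A minimal sketch of the store-and-invalidate pattern is a small cache with a time-to-live and an explicit `invalidate` hook. The `TTLCache` class, the key name, and the 60-second TTL are illustrative assumptions; a production system would more likely use a shared cache than a per-process dictionary.

```python
import time

class TTLCache:
    """Caches deterministic results for ttl_seconds, with explicit invalidation."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock                    # injectable so expiry can be tested
        self._entries = {}                    # key -> (expires_at, value)

    def get_or_compute(self, key, compute):
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]                   # fresh hit: skip the slow path entirely
        value = compute()                     # miss or expired: do the real work once
        self._entries[key] = (now + self.ttl, value)
        return value

    def invalidate(self, key):
        """Call when the underlying data changes, e.g. clinic hours are edited."""
        self._entries.pop(key, None)

if __name__ == "__main__":
    cache = TTLCache(ttl_seconds=60)
    hours = cache.get_or_compute("clinic_hours", lambda: "Mon-Fri 08:00-18:00")
    print(hours)
```

The TTL is a safety net; the `invalidate` call is what keeps answers correct when the source data changes before the entry expires.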

Lean on Redis

Redis is the backbone of many low-latency systems because it provides in-memory storage with sub-millisecond access.

- Maintain session state so conversations don’t need to reload context from a database.

- Cache hot responses to common intents.

- Use rate limiting to ensure spikes don’t overwhelm downstream systems.

In high-volume healthcare bots, Redis often becomes the “first responder,” answering common queries instantly while slower systems catch up in the background.
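The rate-limiting idea can be illustrated with a fixed-window counter. The sketch below keeps the counters in a plain dictionary so it runs without a server; it is an in-memory stand-in, not Redis client code. With Redis, the same logic is commonly built from `INCR` on a per-window key plus `EXPIRE` so old windows clean themselves up. The class name and limits are assumptions.

```python
import time

class FixedWindowLimiter:
    """Allows at most `limit` calls per key in each window of `window_seconds`."""

    def __init__(self, limit, window_seconds, clock=time.time):
        self.limit = limit
        self.window = window_seconds
        self.clock = clock                    # injectable so windows can be tested
        self._counts = {}                     # (key, window_index) -> count

    def allow(self, key):
        window_index = int(self.clock() // self.window)
        # Drop counters from earlier windows (key expiry would do this in Redis).
        self._counts = {b: c for b, c in self._counts.items() if b[1] >= window_index}
        bucket = (key, window_index)
        self._counts[bucket] = self._counts.get(bucket, 0) + 1
        return self._counts[bucket] <= self.limit

if __name__ == "__main__":
    limiter = FixedWindowLimiter(limit=2, window_seconds=1)
    print([limiter.allow("scheduling-api") for _ in range(3)])
```

Fixed windows are simple but allow short bursts at window boundaries; sliding-window or token-bucket variants smooth that out at the cost of more state.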

Push background work off the hot path

Not all work needs to block the user’s response.

- Audit logging can be written to a durable queue (Kafka, SQS, or Redis streams) and flushed later.

- Analytics are valuable, but running them synchronously only punishes the patient.

The rule is simple: if the user doesn’t need it to move forward, it doesn’t belong on the critical path.
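As a sketch of that rule, the handler below enqueues the audit event and returns immediately, while a background worker drains the queue. An in-process `asyncio.Queue` is used here only so the example is self-contained; it is not durable, and a real deployment would hand the event to something like the queues named above. All function names are hypothetical.

```python
import asyncio

async def audit_worker(queue, sink):
    # Drains events in the background; `sink` stands in for a durable store.
    while True:
        event = await queue.get()
        try:
            sink.append(event)
        finally:
            queue.task_done()

async def respond(message, queue):
    reply = f"Received: {message}"
    # Enqueueing is cheap and does not block on the audit write itself.
    queue.put_nowait({"type": "audit", "message": message})
    return reply

async def main():
    queue = asyncio.Queue()
    sink = []
    worker = asyncio.create_task(audit_worker(queue, sink))
    reply = await respond("hello", queue)     # the user gets this without waiting for the write
    await queue.join()                        # flush before shutdown (not on the hot path)
    worker.cancel()
    return reply, sink

if __name__ == "__main__":
    print(asyncio.run(main()))
```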

Streamline guardrails and safety checks

Guardrails are essential, especially in healthcare. But they don’t all have to run before any response is shown.

- Apply profanity filters and redactions token-by-token on streaming output.

- Run heavy compliance checks asynchronously, after the first safe part of the answer is already visible.

This turns guardrails from a blocker into a companion process, cutting seconds without sacrificing safety.
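A streaming filter has to cope with sensitive strings that span several tokens. One possible approach, sketched below, buffers output until a word boundary and redacts each completed chunk before it is shown. The `SENSITIVE` pattern (long digit runs) is a hypothetical rule chosen for illustration, not a real compliance policy.

```python
import re

SENSITIVE = re.compile(r"\d{6,}")   # hypothetical rule: long digit runs look like identifiers

def redact_stream(tokens):
    """Yield text as soon as whole words are complete, redacting each chunk first."""
    buffer = ""
    for token in tokens:
        buffer += token
        # Everything up to the last whitespace is a run of complete words.
        cut = max(buffer.rfind(" "), buffer.rfind("\n")) + 1
        if cut > 0:
            ready, buffer = buffer[:cut], buffer[cut:]
            yield SENSITIVE.sub("[redacted]", ready)
    if buffer:
        yield SENSITIVE.sub("[redacted]", buffer)   # flush the final partial word

if __name__ == "__main__":
    tokens = ["Your ", "record ", "1234", "5678 ", "is ", "ready."]
    print("".join(redact_stream(tokens)))
```

The filter adds at most one word of delay to the stream, which is why it can stay on the hot path while heavier checks run asynchronously.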

How to hide latency

Even with all the engineering in the world, some delays will remain. The good news is that perception can be managed.

> Humans don’t measure milliseconds; they interpret cues.

Text channels

In chat interfaces, visual feedback is powerful. The WhatsApp typing indicator is a perfect example. If it appears immediately after the user sends a message, the user relaxes—even if the real answer takes 800 ms to arrive. Short micro-acknowledgments like “Got it, checking availability…” serve the same purpose. They assure the patient that the system is engaged and working, not frozen.

Streaming responses are another tool. Even if the full reply takes two seconds to generate, starting the output stream at 200 ms changes the perception completely. To the user, the system feels alive, not lagging.
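These cues can be combined in one small pattern: send a typing indicator right away, and add a micro-acknowledgment only if the real answer is taking longer than a threshold. The message dictionaries, the `send` callback, and the 0.5-second threshold are assumptions for illustration; they do not correspond to any specific channel provider's API.

```python
import asyncio

async def reply_with_cues(generate_reply, send, ack_after_s=0.5):
    await send({"type": "typing"})                         # immediate visual feedback
    task = asyncio.ensure_future(generate_reply())
    done, _ = await asyncio.wait({task}, timeout=ack_after_s)
    if not done:
        # The answer is slow: acknowledge so the user knows work is in progress.
        await send({"type": "text", "body": "Got it, checking availability…"})
    await send({"type": "text", "body": await task})       # the real answer

if __name__ == "__main__":
    async def slow_reply():
        await asyncio.sleep(1.0)                           # stand-in for a slow backend
        return "There is an opening on Monday at 09:00."

    async def print_send(message):
        print(message)

    asyncio.run(reply_with_cues(slow_reply, print_send))
```

Fast answers skip the acknowledgment entirely, so the extra message only appears when it is actually buying patience.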

Voice channels

Voice is trickier because silence is socially charged. Here, conversational gap-fillers become the equivalent of typing indicators. Short utterances like “Okay,” “Let me check…” or even a subtle breath sound help maintain conversational rhythm. These fillers don’t just hide latency—they make the system feel more human.

Some teams experiment with pre-roll audio, such as a small “thinking” sound, a “typing” sound or a deliberate “hmm.” Used sparingly, these tricks buy half a second of goodwill that would otherwise feel like awkward silence.

Why hiding works

The key is that perception is not linear. A system that always responds in 700 ms but feels dead during that time seems slower than one that responds in 1.2 seconds but streams output or uses cues. Users care about whether the conversation feels alive, not about stopwatch numbers. The best systems optimize both reality and perception in tandem.

Continuous improvement

Latency optimization is not a one-off project. Systems evolve, integrations multiply, and bottlenecks shift. Continuous measurement and iteration are essential. A good starting point is to focus on the most common user flows, the top thirty intents that account for the majority of traffic, and optimize those first. Speculative parallelism can also make a difference, since a lightweight intent model might predict that a scheduling query is coming, so the system can hit the scheduling API in parallel while the LLM is still deciding. When the LLM responds, the data is already in hand.

Equally important is to establish service level objectives with explicit targets, such as “95 percent of voice responses under 600 ms.” Pair these with dashboards and alerts so regressions are caught early. Latency histograms published per channel help teams see the real distribution of user experience rather than being lulled by averages.
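A target like the one quoted above can be checked directly against recorded samples, and a coarse histogram makes the distribution visible. The bucket boundaries and sample values below are illustrative assumptions; real dashboards would pull these from a metrics system.

```python
from collections import Counter

BUCKETS_MS = (100, 250, 500, 1000, 2000)   # hypothetical bucket upper bounds

def histogram(samples_ms):
    """Count samples per latency bucket, labelled by upper bound."""
    counts = Counter()
    for s in samples_ms:
        label = next((f"<={b}" for b in BUCKETS_MS if s <= b), f">{BUCKETS_MS[-1]}")
        counts[label] += 1
    return dict(counts)

def meets_slo(samples_ms, target_ms, percent=95):
    """True when at least `percent`% of samples are under target_ms."""
    within = sum(1 for s in samples_ms if s < target_ms)
    return within * 100 >= percent * len(samples_ms)

if __name__ == "__main__":
    voice = [300] * 19 + [900]             # made-up first-word latencies in ms
    print(histogram(voice), meets_slo(voice, target_ms=600))
```

An alert would fire when `meets_slo` turns false for a channel over the evaluation window.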

Closing reflections

Latency is not only a technical measure but also a psychological one. Patients do not know or care whether you are waiting on Redis, a scheduling API, or an LLM. They only feel delay or the lack of it. The systems that win are not necessarily the ones with the lowest raw milliseconds, but those that manage both the technical pipeline and the human perception of responsiveness. In healthcare, that means building trust with software that feels attentive, immediate, and reliable, even when the underlying reality is complex.

All that patients want is to get their problems solved and to feel heard. No one enjoys calling their doctor’s office, waiting 15 minutes on hold with dull background music, and still not getting an appointment. We are working to shrink that wait from hours, even days, to seconds and milliseconds, with natural conversations and seamless integrations.